At this year’s EA Global London conference, Jan Leike and I ran a discussion session on the machine learning approach to AI safety. We explored some of the assumptions and considerations that come up as we reflect on different research agendas. Slides for the discussion can be found here.

The discussion focused on two topics. The first topic examined assumptions made by the ML safety approach as a whole, based on the blog post Conceptual issues in AI safety: the paradigmatic gap. The second topic zoomed into specification problems, which both of us work on, and compared our approaches to these problems.

Assumptions in ML safety

The ML safety approach focuses on safety problems that can be expected to arise for advanced AI and can be investigated in current AI systems. This is distinct from the foundations approach, which considers safety problems that can be expected to arise for superintelligent AI, and develops theoretical approaches to understanding and solving these problems from first principles.

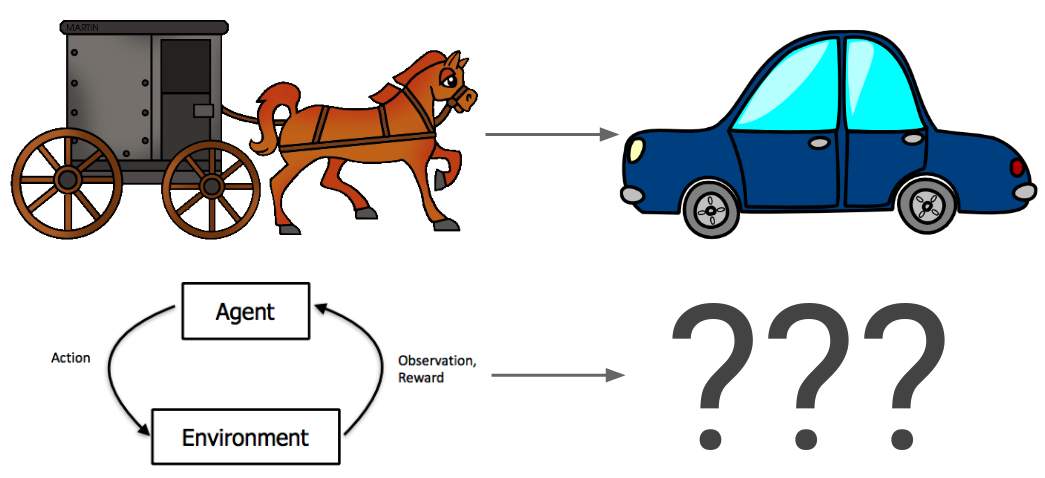

While testing on current systems provides a useful empirical feedback loop, there is a concern that the resulting solutions might not be relevant for more advanced systems, which could potentially be very different from current ones. The Paradigmatic Gap post made an analogy between trying to solve safety problems with general AI using today’s systems as a model, and trying to solve safety problems with cars in the horse and buggy era. The horse to car transition is an example of a paradigm shift that renders many current issues irrelevant (e.g. horse waste and carcasses in the streets) and introduces new ones (e.g. air pollution). A paradigm shift of that scale in AI would be deep learning or reinforcement learning becoming obsolete.

(image credits: horse, car, diagram)

If we imagine living in the horse carriage era, could we usefully consider possible safety issues in future transportation? We could invent brakes and stop lights to prevent collisions between horse carriages, or seat belts to protect people in those collisions, which would be relevant for cars too. Jan pointed out that we could consider a hypothetical scenario with super-fast horses and come up with corresponding safety measures (e.g. pedestrian-free highways). Of course, we might also consider possible negative effects on the human body from moving at high speeds, which turned out not to be an issue. When trying to predict problems with powerful future technology, some of the concerns are likely to be unfounded – this seems like a reasonable price to pay for being proactive on the concerns that do pan out.

Getting back to machine learning, the Paradigmatic Gap post had a handy list of assumptions that could potentially lead to paradigm shifts in the future. We went through this list, and rated each of them based on how much we think ML safety work is relying on it and how likely it is to hold up for general AI systems (on a 1-10 scale). These ratings were based on a quick intuitive judgment rather than prolonged reflection, and are not set in stone.

| Assumption | Reliance (V) | Reliance (J) | Hold up (V) | Hold up (J) |

| 1. Train/test regime | 3 | 2 | 2 | 3 |

| 2. Reinforcement learning | 9 | 9 | 9 | 8 |

| 3. Markov Decision Processes (MDPs) | 2 | 2 | 1 | 2 |

| 4. Stationarity / IID data sampling | 1 | 2 | 1 | 1 |

| 5. RL agents with discrete action spaces | 7 | 8 | 2 | 5 |

| 6. RL agents with pre-determined action spaces | 6 | 9 | 5 | 5 |

| 7. Gradient-based learning / local parameter search | 2 | 3 | 4 | 7 |

| 8. (Purely) parametric models | 2 | 3 | 5 | 3 |

| 9. The notion of discrete “tasks” or “objectives” that systems optimize | 4 | 10 | 6 | 8 |

| 10. Probabilistic inference as a framework for learning and inference | 4 | 8 | 9 | 7 |

Our ratings agreed on most of the assumptions:

- We strongly rely on reinforcement learning, defined as the general framework where an agent interacts with an environment and receives some sort of reward signal, which also includes methods like evolutionary strategies. We would be very surprised if general AI did not operate in this framework.

- Discrete action spaces (#5) is the only assumption that we strongly rely on but don’t strongly expect to hold up. I would expect effective techniques for discretizing continuous action spaces to be developed in the future, so I’m not sure how much of an issue this is.

- The train/test regime, MDPs and stationarity can be useful for prototyping safety methods but don’t seem difficult to generalize from.

- A significant part of safety work focuses on designing good objective functions for AI systems, and does not depend on properties of the architecture like gradient-based learning and parametric models (#7-8).

We weren’t sure how to interpret some of the assumptions on the list, so our ratings and disagreements on these are not set in stone:

- We interpreted #6 as having a fixed action space where the agent cannot invent new actions.

- Our disagreement about reliance on #9 was probably due to different interpretations of what it means to optimize discrete tasks. I interpreted it as the agent being trained from scratch for specific tasks, while Jan interpreted it as the agent having some kind of objective (potentially very high-level like “maximize human approval”).

- Not sure what it would mean not to use probabilistic inference or what the alternative could be.

An additional assumption mentioned by the audience is the agent/environment separation. The alternative to this assumption is being explored by MIRI in their work on embedded agents. I think we rely on this a lot, and it’s moderately likely to hold up.

Vishal pointed out a general argument for the current assumptions holding up. If there are many ways to build general AI, then the approaches with a lot of resources and effort behind them are more likely to succeed (assuming that the approach in question could produce general AI in principle).

Approaches to specification problems

Specification problems arise when specifying the objective of the AI system. An objective specification is a proxy for the human designer’s preferences. A misspecified objective that does not match the human’s intention can result in undesirable behaviors that maximize the proxy but don’t solve the goal.

There are two classes of approaches to specification problems – human-in-the-loop (e.g. reward learning or iterated amplification) and problem-specific (e.g. side effects / impact measures or reward corruption theory). Human-in-the-loop approaches are more general and can address many safety problems at once, but it can be difficult to tell when the resulting system has received the right amount and type of human data to produce safe behavior. The two approaches are complementary, and could be combined by using problem-specific solutions as an inductive bias for human-in-the-loop approaches.

We considered some arguments for using pure human-in-the-loop learning and for complementing it with problem-specific approaches, and rated how strong each argument is (on a 1-10 scale):

| Argument for pure human-in-the-loop approaches |

Strength (V) |

Strength (J) |

| 1. Highly capable agents acting effectively in an open-ended environment would likely already have safety-relevant concepts |

7 |

7 |

| 2. Non-adaptive methods can be reward-hacked |

4 |

5 |

| 3. Unknown unknowns may be a big part of the problem landscape |

6 |

8 |

| 4. More likely to be solved in the next 10 years |

5 |

5-7 |

| Argument for complementing with problem-specific approaches |

Strength (V) |

Strength (J) |

| 1. Could provide a useful inductive bias |

9 |

8 |

| 2. Could help reduce the amount of human data needed |

6 |

6 |

| 3. Could provide better understanding of what humans want and more trust in the system avoiding specific classes of behaviors |

9 |

7 |

| 4. Human feedback may not distinguish between objectives and strategies (e.g. disapprove of losing lives in a game) |

5 |

2 |

Our ratings agreed on most of these arguments:

- The strongest reasons to focus on pure human-in-the-loop learning are #1 (getting some safety-relevant concepts for free) and #3 (unknown unknowns).

- The strongest reasons to complement with problem-specific approaches are #1 (useful inductive bias) and #3 (higher understanding and trust).

- Reward hacking is an issue for non-adaptive methods is an issue (e.g. if the reward function is frozen in reward learning), but making problems-specific approaches adaptive does not seem that hard.

The main disagreement was about argument #4 for complementing – Jan expects the objectives/strategies issues to be more easily solvable than I do.

Overall, this was an interesting conversation that helped clarify my understanding of the safety research landscape. This is part of a longer conversation that is very much a work in progress, and we expect to continue discussing and updating our views on these considerations.

Re: inductive bias. I’m not a fan of this idea. Under ideal circumstances, I think your problem-specific approach would fail on a different set of inputs than your human-in-the-loop approach. Using the problem-specific approach as an inductive bias for the human-in-the-loop approach seems like it would make failures in the two approaches more correlated. To minimize the odds of simultaneous failure, you want failure probabilities to be independent.

LikeLike

Thanks John for the interesting comment! I agree that the problem-specific approach would likely fail on a different set of inputs than the human-in-the-loop approach. I would expect that a hybrid approach would combine the strengths of both, resulting in a smaller rather than a larger set of flaws (roughly speaking, an intersection rather than a conjunction). For example, if a reward learning algorithm fails to teach the agent to avoid a certain side effect which is captured by a side effect measure, then I’d expect that adding the side effect measure as an inductive bias would help the reward learning algorithm avoid the side effect. Let me know if you disagree with this intuition, and what alternatives to the hybrid approach you’d expect to work better.

LikeLike

Thanks for the reply! I don’t have a lot of familiarity with deep RL and it’s possible I’m missing something big. I’ll try to explain my intuition using metaphors from vanilla machine learning.

When we handcraft a detector for an unwanted side effect, there is some sense in which our brains are operating like a machine learning algorithm. We have examples of actions which trigger the unwanted side effect and examples of actions which don’t. Using our imagination, we search through the space of possible models & try to discover a simple classifier with high accuracy.

A standard way to improve accuracy is to use multiple algorithms in an ensemble. When you create an ensemble, you’d like the classifiers in the ensemble to be as different as possible (while still remaining individually accurate)–this is my point about correlated failures above. So if we can use our handcrafted detector in parallel with a non-handcrafted machine learning model that looks very different, that’s a win.

When you talk about inductive bias, I think of telling the ML algorithm to start its search through model-space in the same region that the human imagination already discovered a promising model. But from the perspective of independent failure probabilities, I’d prefer to see the machine learning algorithm discover an entirely different model for detecting this side effect–assuming the entirely new model is also very accurate.

If our stock of examples is small, maybe we could automatically generate new examples, classify them using our handcrafted detector, and add them to our stock of examples with a reduced weight (to account for the possibility that our handcrafted detector misclassified them). That seems like it could help overcome the lack of data problem while preserving a chance that the machine learning algorithm discovers a genuinely new model for thinking about the presence/absence of the side effect.

To extend this thinking further, you could imagine training an entire ensemble of side effect detectors simultaneously (maybe adding terms to their loss functions that nudge them towards classifying autogenerated examples differently?) and do active learning. Come up with dozens and dozens of dissimilar yet plausible hypotheses for what it means to avoid this particular side effect, then repeatedly ask humans about cases the hypotheses are divided on in order to clarify which hypotheses are closest to being correct. You could do multiple iterations of this as your stock of labeled data expanded. This general approach could also be helpful for solving the alignment problem.

I guess there’s no real reason not to take the inductive bias approach too, especially if we want our ensemble to be as large as possible… it does seem plausible that it would catch something the other members of the ensemble miss.

LikeLike

Pingback: Import AI 119: How to benefit AI research in Africa; German politician calls for billions in spending to prevent country being left behind; and using deep learning to spot thefts | Import AI

Pingback: Safety and “AI Safety” | The Abnormal Distribution

Pingback: 2018-19 New Year review | Victoria Krakovna